It’s been a while since I wrote part one of this series, but don’t worry – I didn’t forget that I owe you some sequels.

So, you have read about how it all started, how AI grew as a field of science, about its early achievements and disappointments, and about its pioneers. Naturally, one question arises by itself – can machines really think? I mean really, really think?

Source Maxpixel.net, CC0 Public Domain

The answer to this question is still an enigma to this day. Why?

I’ll first make one basic distinction and introduce two terms: weak (or narrow) AI and strong (or true) AI.

Weak AI doesn’t really think; it’s a system that just simulates thinking and looks intelligent (like some people, khm khm). You all know the virtual assistants Siri and Cortana – they are good representatives of weak AI. You can ask a weak AI to switch on the light or turn on the TV, and the system will recognize your command and carry it out, but without really understanding what a TV is, why you would want to turn it on, or what ‘turning on’ actually means. It just recognizes the command and knows what action to take because it’s programmed that way. Someone watching from the side may think that this is an intelligent machine, but it’s not.

Another example of this is Alexa, Amazon’s personal assistant. Don’t ask me what I think about this kind of gadget.

Strong AI would be something similar to the human brain – it could learn, solve problems, understand emotions, understand its environment and so on. No existing system fully meets that description, but there is an interesting step in that direction in the gaming world – one AI program taught itself to play 49 Atari games, and in the game Breakout it outperformed human players after just 2.5 hours of training. According to the BBC, in half of those games it was able to beat professional gamers.

For a system to be considered intelligent, it should pass the Turing test, which I wrote about last time. Today chatbots are booming, and some of them, like the porn chatbot Natachata, have managed to trick users into thinking that they were chatting with a real person instead of a machine, so at least informally we can say that chatbots like this passed the Turing test. However, Alan Turing himself discussed several objections which imply that even if machines pass the test, they still can’t replace humans, like the “Argument from various disabilities”:

Turing considers a list of things that some people have claimed machines will never be able to do:

Be kind

Be resourceful

Be beautiful

Be friendly

Have initiative

Have a sense of humor

Tell right from wrong

Make mistakes

Fall in love

Enjoy strawberries and cream

Make someone fall in love with one

Learn from experience

Use words properly

Be the subject of one's own thoughts

Have as much diversity of behavior as a man

Do something really new.

Or the “Argument from consciousness”:

Turing cites Professor Jefferson's Lister Oration for 1949 as a source for the kind of objection that he takes to fall under this label:

Not until a machine can write a sonnet or compose a concerto because of thoughts and emotions felt, and not by the chance fall of symbols, could we agree that machine equals brain—that is, not only write it but know that it had written it. No mechanism could feel (and not merely artificially signal, an easy contrivance) pleasure at its successes, grief when its valves fuse, be warmed by flattery, be made miserable by its mistakes, be charmed by sex, be angry or depressed when it cannot get what it wants.

As we can see, AI is a really unique research field, because it combines not only programming and logic, but also philosophy, psychology, biology, neuroscience and so on. Basically, if you want to create a new life, you should first understand life.

So, what exactly is Artificial Intelligence?

It’s a very complex question, because it depends on what we are trying to make – do we need a system that will act just like a human, or do we want a system that does the right thing in the right situation? After all, we all know that humans make mistakes.

So throughout history we have had two different ideas – the humanistic approach and the rationalistic approach. Researchers from these two groups often helped each other, but they also acted disrespectfully toward each other.

In the humanistic approach, in order to make something that will act like a human, we should first learn how humans think, which is pretty hard. A lot of psychological experiments, brain mapping and philosophical thinking have to be done in order to get a picture of human behavior, and only then could we start making an intelligent system that acts like a human.

On the other side, the rationalistic approach is based on logic, but it has two problems. First, it’s really hard to take all informal knowledge and translate it into the domain of logic with appropriate notation. Second, there’s a big difference between solving a problem logically, in theory, and solving it in practice. If we temporarily close our eyes to these problems, this approach has one main advantage – it’s way better for scientific development, because it’s standardized and exactly defined and as such can be universally applied.

This approach uses the idea of rational agents, and that’s the term that will help us dive a little bit deeper into this area!

What is an agent?

You have all probably watched The Matrix trilogy, but I bet there is one thing that you didn’t know. Remember all of those agents, led by Agent Smith, who are chasing Neo through the Matrix? Do you think it’s a coincidence that they are called agents? No, it’s not. It’s one of many brilliant references in the movie and it makes perfect sense.

Simply put, an agent is anything that can take observations from its environment through sensors and, based on them, perform actions through its actuators. Basically, we can say that an agent has a function that maps observations to actions.

Yeah, we are starting to use geeky terms from now on, but I will try to keep it as clear as I can.

As an example, I will mention the well-known vacuum cleaner case from the previous part. It’s a simple agent which gets information through its sensors and has a function that says: if it’s dirty – SUCK, if it’s clean – MOVE.

It’s the simplest example of an agent, and there is one question that you’re maybe asking now – can an agent be good or bad? How can we know whether an agent does the right thing or is just stupid?

It depends on four things:

- How we define the criteria of success

- The agent’s prior knowledge about the environment

- The actions that the agent can perform

- The agent’s history of observations

Depending on an agent’s intelligence and capabilities, there are five types of agents.

Simple reflex agents

It’s the simplest model: it chooses what action to take depending only on the current observation, ignoring all previous observations.

Its program simply looks like IF something THEN do this action.

Author DDSniper, CC0 1.0

This kind of agent is not very intelligent, because it works well only in a fully observable environment and can’t handle unpredictable situations. Another disadvantage is that it can often fall into infinite loops. I’ll explain it with an example.

Remember the vacuum cleaner that I mentioned before? It’s a simple reflex agent with the function IF dirty THEN suck and IF clean THEN move. This kind of agent doesn’t remember its previous moves, so when it comes to a clean surface it doesn’t know whether it has already cleaned it; it only sees that it’s clean now, so it moves on. With a ‘mindset’ like that it can keep moving around forever! That’s an example of a typical infinite loop.
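To make the loop visible, here is a minimal Python sketch of that vacuum cleaner. The two-square world (A and B) and the function name `reflex_vacuum_agent` are my own assumptions for illustration; only the IF dirty THEN suck, IF clean THEN move rule comes from the text above.

```python
def reflex_vacuum_agent(percept):
    """Map the current percept straight to an action, with no memory."""
    location, status = percept
    if status == "dirty":
        return "SUCK"
    return "MOVE"

# Hypothetical world: two squares that are both already clean.
world = {"A": "clean", "B": "clean"}
location = "A"
actions = []

for _ in range(6):
    action = reflex_vacuum_agent((location, world[location]))
    actions.append(action)
    if action == "SUCK":
        world[location] = "clean"
    else:
        # MOVE simply hops to the other square.
        location = "B" if location == "A" else "A"

print(actions)  # six times "MOVE": the agent never notices it is done
```

I stopped the loop after six steps only so that the example ends; the agent itself has no reason to ever stop.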

Model-based reflex agents

This agent keeps some information about the outside world inside itself, and this information forms something called a model of the world. For example, if we talk about an autonomous car as an agent, it must know that after turning the wheel to the right the car will go to the right, or that if it is traveling north and doesn’t turn right or left anywhere, it is still traveling north.

Author DDSniper, CC0 1.0
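The car example can be sketched in a few lines of Python. This is only the “model of the world” part, not a whole agent, and the class name `HeadingModel` and the action names are hypothetical ones that I made up for this sketch.

```python
HEADINGS = ["north", "east", "south", "west"]  # clockwise order

class HeadingModel:
    """Internal state: the direction the car believes it is traveling."""

    def __init__(self, heading="north"):
        self.heading = heading

    def update(self, action):
        """Predict the new heading after an action, without looking outside."""
        i = HEADINGS.index(self.heading)
        if action == "turn_right":
            i = (i + 1) % 4
        elif action == "turn_left":
            i = (i - 1) % 4
        # Any other action (e.g. "forward") leaves the heading unchanged.
        self.heading = HEADINGS[i]
        return self.heading

model = HeadingModel("north")
for action in ["forward", "forward", "turn_right", "forward"]:
    model.update(action)

print(model.heading)  # east
```

The point is that the car doesn’t need a compass reading at every step: it remembers where it was heading and updates that belief from its own actions.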

Goal-based agents

This type of agent has information about its final goal.

Author DDSniper, CC0 1.0

If we take the autonomous car from the previous example and put it at a crossroad, it’s not enough that the car knows what a crossroad is; it must also know its final destination, or ‘goal’, in order to decide what action to take – to go left, right or forward.

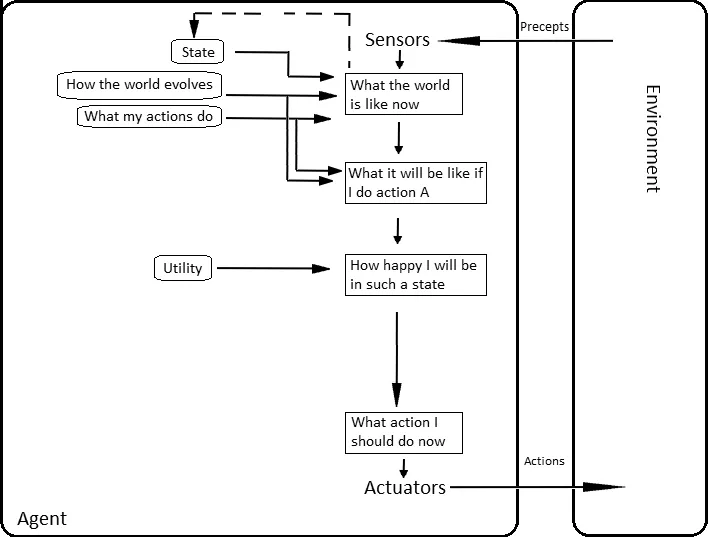
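Here is a small Python sketch of the crossroad decision. The road map `ROADS`, the place names and the function `first_move_towards` are all invented for illustration, and I use a plain breadth-first search as one possible way to look ahead toward the goal.

```python
from collections import deque

# Hypothetical road map: place -> {direction: next place}
ROADS = {
    "crossroad": {"left": "park", "forward": "bridge", "right": "mall"},
    "park": {"forward": "lake"},
    "bridge": {"forward": "office"},
    "mall": {},
    "lake": {},
    "office": {},
}

def first_move_towards(start, goal):
    """Breadth-first search: return the first direction on a shortest
    route from start to goal, or None if there is no move to make."""
    queue = deque([(start, None)])
    visited = {start}
    while queue:
        place, first = queue.popleft()
        if place == goal:
            return first
        for direction, nxt in ROADS[place].items():
            if nxt not in visited:
                visited.add(nxt)
                # Remember only the very first turn taken on this route.
                queue.append((nxt, first or direction))
    return None

print(first_move_towards("crossroad", "office"))  # forward
print(first_move_towards("crossroad", "lake"))    # left
```

The same crossroad gives different answers for different goals, which is exactly what a reflex agent can’t do.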

Utility-based agents

It’s not always enough just to know your goal – with your autonomous car you can get from point A to point B by many roads, but those roads are not the same. Some of them are cheaper, shorter, more comfortable etc.

Author DDSniper, CC0 1.0

So this agent not only wants to achieve its goal, but wants to achieve it in the best possible way.

It has a utility function that uses data to calculate the utility of every action, and based on that function it chooses what to do in order to get the best performance.
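A utility function can be as simple as a weighted sum. In this Python sketch the three routes, their numbers and the weights are made-up assumptions; a real car would of course use far richer data.

```python
# Hypothetical routes from A to B, all of which reach the goal.
ROUTES = {
    "highway": {"cost": 8.0, "km": 30.0, "comfort": 9.0},
    "city":    {"cost": 3.0, "km": 22.0, "comfort": 4.0},
    "scenic":  {"cost": 2.0, "km": 45.0, "comfort": 7.0},
}

# Assumed preferences: cost and distance hurt, comfort helps.
WEIGHTS = {"cost": -1.0, "km": -0.2, "comfort": 0.5}

def utility(route):
    """Weighted sum of the route's properties; higher is better."""
    return sum(WEIGHTS[key] * route[key] for key in WEIGHTS)

best = max(ROUTES, key=lambda name: utility(ROUTES[name]))
print(best)  # city
```

Change the weights (say, make comfort matter much more) and the same agent will pick a different road, even though the goal stays the same.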

Learning agents

In order to call something intelligent, it should have the ability to learn something new.

Imagine the previous agents with a new ability to train themselves in order to perform better next time.

Author Utkarshraj Atmaram; Free for use

A learning agent has new, interesting elements that help it during the learning process:

- Learning element – it receives a feedback signal from a critic. If an action was good, that signal tells the agent so, and the agent learns that it’s a good thing and may repeat that action in the future. If it was a bad action, the signal teaches the agent not to do it anymore.

- Performance element – this is the element that we earlier considered to be the whole agent. It uses observations from the environment and chooses what action to take.

- Problem generator – its job is to suggest new actions that will lead the agent to new information and knowledge.

Now that we have scratched the surface of this area, let’s try to find a simple answer to my earlier question, which I said was pretty complex:
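The elements above can be sketched in Python like this. Everything here is a toy assumption of mine: the class `LearningAgent`, the fixed rewards inside `critic`, the running-average update and the “explore every third step” rule are just one simple way to wire the pieces together.

```python
class LearningAgent:
    def __init__(self, actions):
        self.values = {a: 0.0 for a in actions}  # learned estimate per action
        self.tries = {a: 0 for a in actions}     # how often each was tried

    def performance_element(self):
        """Pick the action currently believed to be the best."""
        return max(self.values, key=self.values.get)

    def problem_generator(self):
        """Suggest the least-tried action, to gather new information."""
        return min(self.tries, key=self.tries.get)

    def learning_element(self, action, feedback):
        """Move the estimate toward the critic's feedback (running average)."""
        self.tries[action] += 1
        self.values[action] += (feedback - self.values[action]) / self.tries[action]

def critic(action):
    """Hypothetical feedback signal: a fixed score for each route."""
    return {"highway": 1.0, "city": 3.0, "scenic": 2.0}[action]

agent = LearningAgent(["highway", "city", "scenic"])
for step in range(9):
    # Every third step the problem generator gets to propose something new.
    if step % 3 == 0:
        action = agent.problem_generator()
    else:
        action = agent.performance_element()
    agent.learning_element(action, critic(action))

print(agent.performance_element())  # city
```

Without the problem generator this agent would try "highway" first, see a positive score and never try anything else, so it would never discover that "city" is better.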

So, what exactly is Artificial Intelligence?

We can say that it is a science whose job is to design the best possible intelligent agent!

I hope that you enjoyed it,

Nikola

Thanks to the charming @scienceangel for mentoring me while I was writing this article!

Sources:

Artificial Intelligence: A Modern Approach, Stuart Russell and Peter Norvig

Wiki weak AI

Wiki strong AI

Weak vs Strong AI

Wiki intelligent agent